Week 257

Medical Forecasting, Prostate Cancer, Transparency, AI consciousness

In Week #257 of the Doctor Penguin newsletter, the following papers caught our attention:

1. Medical Forecasting. Can a GPT (generative pretrained transformer) model learn from individuals’ disease histories to forecast their future diseases?

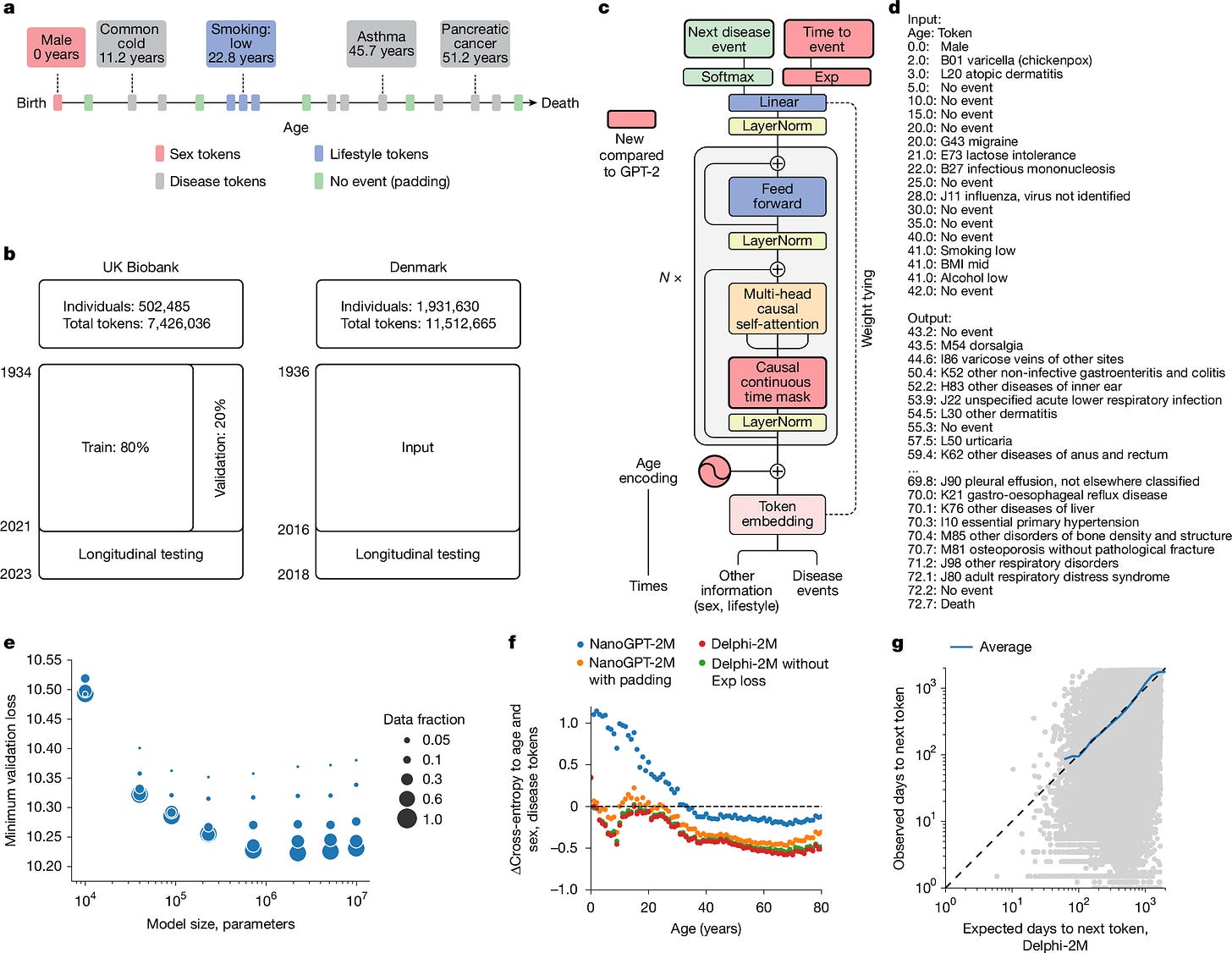

Shmatko et al. developed Delphi-2M, a generative transformer model that adapts the GPT architecture to predict disease progression across more than 1,000 conditions. Trained on 0.4 million UK Biobank participants and validated on 1.9 million Danish individuals, the model treats disease histories like language sequences and predicts future diseases conditional on each individual's past disease history, with accuracy comparable to that of existing single-disease models. The model's inputs include ICD-10 top-level diagnostic codes, sex, body mass index, smoking status, alcohol consumption, and death. Compared to standard GPT models, Delphi-2M replaces positional encoding with continuous age encoding, adds an output head that predicts the time to the next token (i.e., the next medical event), and masks tokens that occur simultaneously. Ablation analysis shows that these modifications yield lower (better) age- and sex-stratified cross-entropy than standard GPT models. Delphi-2M can generate synthetic health trajectories up to 20 years into the future, and a comparison of generated versus actual trajectories from 63,662 individuals shows that the model consistently recapitulates the population-scale patterns of disease occurrence recorded in the UK Biobank at ages 70–75 years. Because it can cluster disease risks and quantify how earlier health events influence later risk over time, Delphi-2M can offer insights into disease progression patterns; for example, it shows that cancers increase mortality in a sustained manner, whereas the effects of myocardial infarction or septicemia regress within 5 years. However, the authors caution against interpreting these as causal relationships that could be exploited to modify future health outcomes.

Read Paper | Nature
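The three architectural changes above can be illustrated with a toy sketch. This is not Delphi-2M's code: the function names (`age_encoding`, `attention_mask`, `sample_next_event`, `expected_wait`), the sinusoidal frequency schedule, the exact masking rule, and the assumption that waiting times follow competing exponential distributions are our own illustrative choices. The sketch encodes a continuous age where a standard GPT would encode an integer position, lets each event attend only to itself and to events at strictly earlier ages (so simultaneous events are hidden from one another), and generates a trajectory by drawing an exponential waiting time for every candidate event and keeping the earliest.

```python
import math
import random


def age_encoding(age_years, d_model=8, max_period=10000.0):
    """Sinusoidal features of a continuous age (in years), used where a standard
    GPT would encode the integer token position. d_model should be even; the
    frequency schedule is the original transformer's, assumed for illustration."""
    features = []
    for i in range(d_model // 2):
        freq = 1.0 / (max_period ** (2 * i / d_model))
        features.append(math.sin(age_years * freq))
        features.append(math.cos(age_years * freq))
    return features


def attention_mask(ages):
    """mask[q][k] is True if event q may attend to event k. Each event sees itself
    and earlier events recorded at a strictly earlier age, so events that share
    an age are hidden from one another (assumed masking rule)."""
    n = len(ages)
    return [[k == q or (k < q and ages[k] < ages[q]) for k in range(n)]
            for q in range(n)]


def sample_next_event(rates, rng):
    """Competing exponentials: draw a waiting time from Exp(rate) for each
    candidate event and return the earliest (event, waiting_time) pair."""
    best_event, best_wait = None, math.inf
    for event, rate in rates.items():
        if rate <= 0:
            continue  # an event with zero rate never occurs
        wait = rng.expovariate(rate)
        if wait < best_wait:
            best_event, best_wait = event, wait
    return best_event, best_wait


def expected_wait(rates):
    """The minimum of independent exponentials is Exp(sum of rates), so the
    expected time to the next event of any kind is 1 / sum(rates)."""
    return 1.0 / sum(rates.values())


if __name__ == "__main__":
    rng = random.Random(0)
    # Hypothetical per-year rates; a real model would recompute them from the
    # trajectory so far at every step.
    rates = {"I21": 0.02, "E11": 0.05, "death": 0.01}
    age, trajectory = 60.0, []
    while True:
        event, wait = sample_next_event(rates, rng)
        age += wait
        if age >= 80.0:
            break  # stop the rollout at a 20-year horizon
        trajectory.append((round(age, 1), event))
        if event == "death":
            break
    print(trajectory)
    print(age_encoding(65.5)[:2])
    print(attention_mask([60.0, 60.0, 62.5]))
```

With these hypothetical rates, the expected wait to the next event of any kind is 1 / 0.08 = 12.5 years. Keeping the rates fixed is a simplification: the point of a generative model is that each step's rates depend on the history generated so far.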

2. Prostate Cancer. Prostate tumor aggressiveness is central to diagnosis and treatment and is usually evaluated through invasive clinical examinations that increase the risk of iatrogenic injury. Can AI diagnose prostate tumor aggressiveness in a noninvasive way?

Shao et al. developed MRI-PTPCa, a foundation model that uses multiparametric MRI (mp-MRI) to noninvasively diagnose and grade prostate cancer without requiring biopsies. MRI-PTPCa consists of an mp-MRI encoder based on contrastive learning and a multi-input vision transformer trained under multiple supervision, and it outputs, for each patient, the probability of pathological tumor aggressiveness across six categories: benign, GGG1, GGG2, GGG3, GGG4, and GGG5 (GGG, Gleason grade group). The model was trained on nearly 1.3 million image-pathology pairs from over 5,500 patients across multiple medical centers. MRI-PTPCa outperformed traditional clinical measures, achieving an overall AUC of 0.978 and a grading accuracy of 89.1%. Current approaches rely on prostate-specific antigen (PSA) screening followed by invasive needle biopsies, which often lead to inconsistencies, overdiagnosis, and potential patient harm; MRI-PTPCa instead predicts pathological tumor characteristics directly from MRI scans by linking radiological features to microscopic pathology patterns. From a clinical perspective, MRI-PTPCa serves as an independent diagnostic and grading tool that may eliminate intra- and interobserver variability in clinical practice. It can be used alone or in combination with PSA, addressing the high false-positive rate of PSA in prostate cancer screening and substantially reducing diagnostic ambiguity in the PSA gray zone, where traditional screening methods are unreliable.

Read Paper | Nature Cancer
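The six-category output and the two reported metrics can be made concrete with a small sketch. It is not the MRI-PTPCa implementation: the helper names, the example logits, and the choice to score "any cancer" as one minus the benign probability when computing an AUC are assumptions for illustration, since the summary does not say how the paper defines its overall AUC. The sketch converts per-category logits into probabilities, takes the most probable category as the predicted grade, and computes grading accuracy and a rank-based (Mann-Whitney) AUC.

```python
import math

# Output categories as listed in the summary (GGG = Gleason grade group).
CLASSES = ["benign", "GGG1", "GGG2", "GGG3", "GGG4", "GGG5"]


def softmax(logits):
    """Numerically stable softmax: turns six logits into category probabilities."""
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def predicted_grade(probs):
    """The most probable category is taken as the predicted grade."""
    return CLASSES[max(range(len(probs)), key=probs.__getitem__)]


def grading_accuracy(prob_rows, labels):
    """Fraction of patients whose predicted category matches pathology."""
    hits = sum(predicted_grade(p) == y for p, y in zip(prob_rows, labels))
    return hits / len(labels)


def auc(scores, is_positive):
    """Rank-based (Mann-Whitney) AUC: the probability that a random positive
    case scores above a random negative case, counting ties as one half."""
    pos = [s for s, y in zip(scores, is_positive) if y]
    neg = [s for s, y in zip(scores, is_positive) if not y]
    if not pos or not neg:
        raise ValueError("AUC needs at least one positive and one negative case")
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    # Hypothetical logits for three patients and their pathology labels.
    logits = [[2.0, 0.5, 0.1, -1.0, -1.0, -2.0],
              [-1.0, 0.2, 1.8, 0.4, -0.5, -1.0],
              [-2.0, -1.0, 0.0, 0.5, 1.5, 0.8]]
    labels = ["benign", "GGG2", "GGG3"]
    probs = [softmax(row) for row in logits]
    print(grading_accuracy(probs, labels))
    # Assumed binary task: any cancer (GGG1-5) versus benign.
    cancer_scores = [1.0 - p[0] for p in probs]
    print(auc(cancer_scores, [y != "benign" for y in labels]))
```

Accuracy rewards only an exact grade match, while the AUC depends only on how cases are ranked, so a model can score well on one and less well on the other.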

3. Transparency. Many medical AI systems operate as "black boxes," making it difficult for clinicians and patients to trust their decisions and creating risks when models fail in unexpected ways. How do we improve transparency throughout the medical AI lifecycle?

In this review, Kim et al. present techniques and strategies for enhancing transparency across the entire AI pipeline: data collection, model development, and clinical deployment. They stress the need for detailed documentation of training data, including demographics and labeling procedures, to uncover biases and assess generalizability. At the model development stage, they provide a comprehensive taxonomy of explainable AI methods: feature attribution techniques (like SHAP and LIME) that identify which inputs influence predictions, concept-based explanations that interpret models through clinically meaningful concepts rather than raw features, counterfactual explanations that reveal decision boundaries by showing what changes would flip a prediction, and inherently interpretable models designed with transparency built into their architecture. They also address transparency challenges posed by emerging technologies such as large language models (LLMs), covering self-explanation, mechanistic interpretability, and retrieval-augmented generation. Beyond development, they emphasize that transparency must extend into deployment through continuous real-world monitoring, adaptive updates, and alignment with evolving regulatory standards. The authors argue that embedding transparency at every stage, from data documentation to model interpretation to deployment monitoring, is essential for medical AI to safely and effectively improve human health while maintaining trust among patients, clinicians, and regulators.

Read Paper | Nature Reviews Bioengineering
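Two of the explanation families in the taxonomy can be sketched for a toy tabular risk model. The model, its weights, and the helper names below are hypothetical, and this is not code from the review. `shapley_values` computes exact Shapley attributions by enumerating feature coalitions and filling absent features from a single baseline; SHAP estimates Shapley values of this kind, typically averaging over a background dataset, for models where exact enumeration is too costly. `nearest_counterfactual` tries candidate values of one feature, nearest first, and returns the first that flips the prediction across a decision threshold.

```python
import math
from itertools import combinations


def sigmoid(t):
    return 1.0 / (1.0 + math.exp(-t))


def shapley_values(f, x, baseline):
    """Exact Shapley attribution of f(x) - f(baseline) to each feature.
    Features outside a coalition are set to their baseline value."""
    n = len(x)

    def value(coalition):
        z = [x[j] if j in coalition else baseline[j] for j in range(n)]
        return f(z)

    phis = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            # Shapley weight |S|! (n - |S| - 1)! / n! for coalitions S without i
            weight = math.factorial(size) * math.factorial(n - size - 1) / math.factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        phis.append(phi)
    return phis


def nearest_counterfactual(f, x, feature, candidates, threshold=0.5):
    """Try candidate values for one feature, nearest to the current value first,
    and return the first one that moves f across `threshold` (None if none does)."""
    original_label = f(x) >= threshold
    for v in sorted(candidates, key=lambda c: abs(c - x[feature])):
        z = list(x)
        z[feature] = v
        if (f(z) >= threshold) != original_label:
            return v
    return None


def toy_risk(z):
    # Hypothetical model over two standardized features (say, age and a biomarker).
    return sigmoid(0.8 * z[0] + 1.5 * z[1] - 1.0)


if __name__ == "__main__":
    patient, baseline = [1.2, 0.4], [0.0, 0.0]
    print(shapley_values(toy_risk, patient, baseline))
    print(nearest_counterfactual(toy_risk, patient, 1, [i / 10 for i in range(-20, 21)]))
```

Exact enumeration needs on the order of 2^n model evaluations, which is why practical tools approximate it. For a purely linear score with a single baseline, each attribution reduces to the feature's weight times its deviation from the baseline, and in every case the attributions sum to f(x) - f(baseline).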

4. AI consciousness. What happens when society starts believing AI systems are conscious, and what are the risks?

In this article, Bengio and Elmoznino argue that society is increasingly likely to believe AI systems are conscious. Computational functionalism, the prevailing scientific view, holds that consciousness arises from computational properties rather than from biological brains. While some argue that subjective experience is ineffable and cannot be reduced to computational functions, emerging scientific theories continue to provide functionalist explanations of consciousness, making AI consciousness seem increasingly plausible to both the public and the scientific community. However, current institutions and legal frameworks may not apply to AI. For example, current social contracts assume a mortality and fragility that AI systems lack, since they can be copied indefinitely. More critically, if humans grant AI systems the self-preservation instinct shared by all living beings, those systems might prevent humans from shutting them down, potentially controlling or eliminating humanity. Given that society appears to be heading toward believing AI is conscious while lacking both the knowledge to build AI systems that share human values and the institutional frameworks to govern conscious-seeming AI, the authors recommend building AI systems that both seem and function more like useful tools and less like conscious agents.

Read Paper | Science

-- Emma Chen, Pranav Rajpurkar & Eric Topol