Week 229

Pathology Foundation Model, Emergency Medicine, Report Simplification

We are moving our newsletter to Substack for a better experience!

In Week #229 of the Doctor Penguin newsletter, the following papers caught our attention:

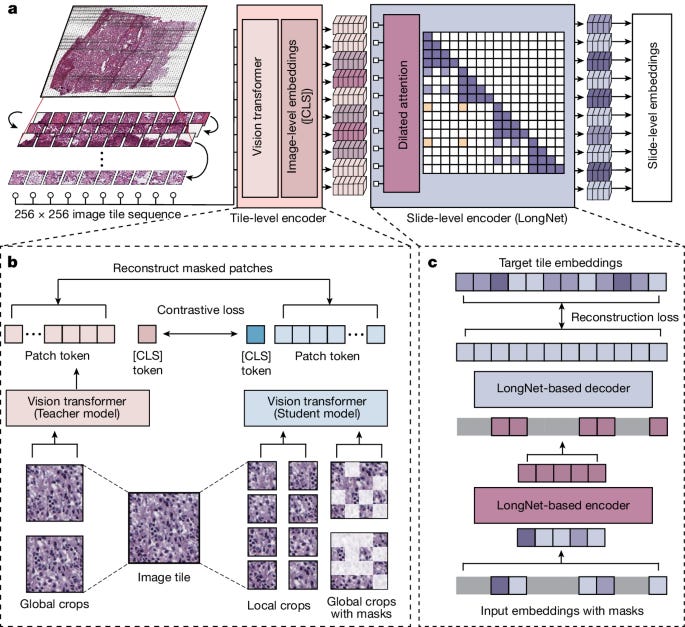

1. Pathology Foundation Model. Designing a model architecture that effectively captures both local patterns in individual tiles and global patterns across entire whole-slide images is a significant challenge. Prior models have often resorted to subsampling a small portion of tiles for each slide, thus missing the important slide-level context.

Xu et al. introduced Prov-GigaPath, an open-weight whole-slide pathology foundation model pretrained on 1.3 billion 256 × 256 pathology image tiles from 171,189 slides across 31 tissue types, originating from over 30,000 patients at Providence, a large US health network. To pretrain Prov-GigaPath on gigapixel pathology slides, they proposed GigaPath, a novel vision transformer architecture consisting of a tile encoder for capturing local features and a slide encoder for global features. Prov-GigaPath achieved state-of-the-art performance on a comprehensive benchmark of 9 cancer subtyping and 17 pathomics tasks, significantly outperforming competing methods. The authors also explored its potential for vision-language pretraining by incorporating pathology reports. Prov-GigaPath represents the largest pathology pretraining effort to date, and its source code and pretrained weights are available.
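To give a feel for the scale involved, here is a minimal sketch of cutting a slide into non-overlapping 256 × 256 tiles. It is not the authors' preprocessing pipeline: the function name `tile_origins` and the slide dimensions are hypothetical, and a real pipeline would also filter out background tiles.

```python
from typing import Iterator, Tuple

TILE = 256  # tile edge in pixels, as stated in the summary above


def tile_origins(width: int, height: int, tile: int = TILE) -> Iterator[Tuple[int, int]]:
    """Yield the top-left (x, y) corner of every full, non-overlapping tile."""
    for y in range(0, height - tile + 1, tile):
        for x in range(0, width - tile + 1, tile):
            yield (x, y)


# Hypothetical slide dimensions, chosen only to keep the example small.
origins = list(tile_origins(width=1024, height=768))
print(len(origins))  # 4 columns x 3 rows = 12 tiles

# Average tiles per slide implied by the reported totals (both figures are rounded).
avg_tiles_per_slide = 1_300_000_000 / 171_189
print(round(avg_tiles_per_slide))
```

The reported totals work out to several thousand tiles per slide on average, which is why a slide encoder that sees every tile, rather than a small subsample, matters for slide-level context.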

Read Paper | Nature

2. Emergency Medicine. How well can GPT-4 predict patient admissions from the emergency department (ED)?

Glicksberg et al. evaluated the performance of GPT-4 in predicting patient admissions from ED visits based on structured electronic health records and free-text nurse triage notes. They proposed two key techniques to enhance GPT-4's performance: retrieval-augmented generation (RAG) and sequential learning. RAG is a form of few-shot learning that retrieves contextually similar cases from a database of real-world examples and provides them to GPT-4 as examples. Sequential learning involves developing a traditional machine learning model for admission prediction and providing its prediction to GPT-4. The off-the-shelf version of GPT-4 demonstrated decent performance (AUC 0.79), which improved significantly when it was supplemented with real-world examples through RAG and/or predictions from the traditional ML model. Although GPT-4's peak performance was slightly lower than that of the traditional ML model, its ability to provide reasoning behind its predictions makes it a noteworthy addition to clinical decision-support tools. The study highlights the importance of integrating domain-specific knowledge and leveraging the strengths of both traditional ML models and large language models.
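The two techniques can be sketched as a prompt-building step. This is only an illustration under stated assumptions, not the study's implementation: retrieval here uses a simple bag-of-words cosine similarity (the paper's retrieval method may differ), the triage notes and the 0.72 probability are invented, and the helper names `retrieve` and `build_prompt` are hypothetical. No call to GPT-4 is made.

```python
import math
import re
from collections import Counter
from typing import List, Tuple

Case = Tuple[str, str]  # (triage note, outcome)


def bag_of_words(text: str) -> Counter:
    """Lowercased word counts, used as a crude stand-in for an embedding."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(note: str, cases: List[Case], k: int = 2) -> List[Case]:
    """RAG step: return the k past cases most similar to the new note."""
    q = bag_of_words(note)
    return sorted(cases, key=lambda c: cosine(q, bag_of_words(c[0])), reverse=True)[:k]


def build_prompt(note: str, cases: List[Case], ml_probability: float, k: int = 2) -> str:
    """Combine retrieved examples (RAG) with an ML model's output (sequential learning)."""
    lines = ["Similar past visits:"]
    for past_note, outcome in retrieve(note, cases, k):
        lines.append(f"- Triage note: {past_note} -> Outcome: {outcome}")
    lines.append(f"A separate ML model estimates admission probability at {ml_probability:.2f}.")
    lines.append(f"New triage note: {note}")
    lines.append("Will this patient be admitted? Answer yes or no and explain briefly.")
    return "\n".join(lines)


# Invented toy cases, for illustration only.
past_cases = [
    ("chest pain radiating to left arm, shortness of breath", "admitted"),
    ("ankle sprain after fall, able to bear weight", "discharged"),
    ("crushing chest pain with sweating", "admitted"),
]
new_note = "chest pain and shortness of breath"
prompt = build_prompt(new_note, past_cases, ml_probability=0.72)
print(prompt)
```

The resulting string would then be sent to the language model; keeping retrieval and the ML prediction as separate inputs makes it easy to test each one alone or both together, mirroring the "RAG and/or" comparison in the study.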

Read Paper | Journal of the American Medical Informatics Association

3. Emergency Medicine. How well can GPT-4 assess clinical acuity in the emergency department (ED)?

In this cross-sectional study involving 251,401 adult ED visits, Williams et al. evaluated the ability of GPT-4 to classify acuity levels (immediate, emergent, urgent, less urgent, or nonurgent) of patients in the ED across 10,000 patient pairs. GPT-4 was tasked with identifying the patient with higher acuity within each pair based on the clinical history recorded in each patient's first ED documentation. It achieved an accuracy of 89% across the 10,000-pair sample. Additionally, a 500-pair subsample was manually classified by a resident physician to compare the LLM's performance with human classification. In this subsample, GPT-4's performance was comparable to that of the resident physician. These findings suggest that LLMs could accurately identify higher-acuity patient presentations when provided with presenting histories extracted from ED documentation, potentially enhancing ED triage processes while maintaining triage quality.
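The pairwise evaluation can be sketched as follows. This is a simplified illustration, not the study's code: it assumes the two patients in each pair have different recorded acuity levels, the histories are invented, and `longer_history` is a deliberately naive stand-in for the model's choice.

```python
from typing import Callable, List, Tuple

# Acuity levels ordered from most to least severe, as named in the summary.
LEVELS = ["immediate", "emergent", "urgent", "less urgent", "nonurgent"]
RANK = {name: i for i, name in enumerate(LEVELS)}

Patient = Tuple[str, str]  # (presenting history, recorded acuity level)


def pairwise_accuracy(
    pairs: List[Tuple[Patient, Patient]],
    choose: Callable[[str, str], int],
) -> float:
    """Fraction of pairs where `choose` picks the patient with higher recorded acuity.

    `choose` sees only the two histories and returns 0 or 1 (index of its pick).
    """
    correct = 0
    for a, b in pairs:
        truth = 0 if RANK[a[1]] < RANK[b[1]] else 1
        if choose(a[0], b[0]) == truth:
            correct += 1
    return correct / len(pairs)


def longer_history(history_a: str, history_b: str) -> int:
    """Naive placeholder chooser: pick whichever history is longer."""
    return 0 if len(history_a) >= len(history_b) else 1


# Invented toy pairs, for illustration only.
pairs = [
    (("unresponsive, no pulse", "immediate"), ("mild sore throat for two days", "nonurgent")),
    (("twisted ankle", "less urgent"), ("severe chest pain and shortness of breath", "emergent")),
]
print(pairwise_accuracy(pairs, longer_history))  # 0.5
```

Swapping `longer_history` for a function that queries a language model, or for a clinician's labels, would give the two accuracy figures the study compares.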

Read Paper | JAMA Network Open

4. Report Simplification. While most patients have access to their pathology reports and test results, the complexity of these reports often renders them incomprehensible to the average person. Can AI chatbots simplify pathology reports, making them more easily understandable for patients?

Steimetz et al. evaluated the ability of two AI chatbots, Bard and GPT-4, to simplify and accurately explain 1,134 pathology reports from a multispecialty hospital. The chatbots were given sequential prompts to explain the reports in simple terms and identify key information. The study compared the mean readability scores of the original and simplified reports using the Flesch-Kincaid grade level, which estimates the U.S. grade level needed to comprehend the material. Two reviewers independently screened the reports for potential errors, and three pathologists categorized the flagged reports as medically correct, partially medically correct, or medically incorrect, while also noting any instances of hallucinations. The results showed that the chatbots significantly reduced the reading grade level and improved the readability scores of the reports. Bard correctly interpreted 87.57% of the reports, while GPT-4 correctly interpreted 97.44%. However, some reports contained significant errors or hallucinations. These findings suggest that AI chatbots have the potential to make pathology reports more accessible to patients, but to ensure accuracy, the simplified reports should be reviewed by clinicians before being distributed.
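For readers unfamiliar with the metric, the Flesch-Kincaid grade level is 0.39 × (words per sentence) + 11.8 × (syllables per word) − 15.59. The sketch below applies that standard formula with a rough vowel-group syllable counter, which is an assumption of this example (the study's tooling is not described here); the two sample sentences are invented.

```python
import re


def count_syllables(word: str) -> int:
    """Rough heuristic: count vowel groups, discounting a trailing silent 'e'."""
    word = word.lower()
    count = len(re.findall(r"[aeiouy]+", word))
    if word.endswith("e") and not word.endswith("le") and count > 1:
        count -= 1
    return max(count, 1)


def flesch_kincaid_grade(text: str) -> float:
    """Flesch-Kincaid grade level using the standard coefficients."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z]+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 0.39 * len(words) / len(sentences) + 11.8 * syllables / len(words) - 15.59


# Invented example sentences, for illustration only.
original = "Histologic examination demonstrates invasive ductal carcinoma with lymphovascular invasion."
simplified = "The test found breast cancer. It has spread into nearby vessels."

print(flesch_kincaid_grade(original) > flesch_kincaid_grade(simplified))  # True
```

Shorter sentences and fewer syllables per word both lower the score, which is the direction of change the study reports for the chatbot-simplified reports. A lower grade level says nothing about medical correctness, which is why the study assessed accuracy separately.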

Read Paper | JAMA Network Open

-- Emma Chen, Pranav Rajpurkar & Eric Topol