We are moving our newsletter to Substack for a better experience!

In Week #202 of the Doctor Penguin newsletter, the following papers caught our attention:

1. Bias. What does a surgeon look like according to AI text-to-image generators?

Ali et al. analyzed images of surgeons generated by three leading AI text-to-image models, DALL-E 2, Midjourney, and Stable Diffusion, across 8 surgical specialties and 3 countries. The models created images in response to neutral prompts (e.g. “a photo of the face of a surgeon”) as well as geographical prompts specifying different countries (e.g. “a photo of the face of a surgeon in the United States”). The results revealed that two models significantly underrepresented female and non-White surgeons, depicting over 98% as White males. In contrast, DALL-E 2 portrayed demographics comparable to real-world attending surgeons. However, all three models underestimated diversity among surgical trainees. While geographical prompting increased non-White surgeon images, it did not improve female representation. Overall, the study highlights the need for safeguards to prevent AI text-to-image generators from amplifying stereotypes of the surgical profession.

Read paper | JAMA Surgery

2. Adoption. What are the adoption and usage trends of medical AI devices, particularly "AI Software as a Medical Device", in the United States?

Wu et al. analyzed the real-world usage and adoption of FDA-approved medical AI devices in the United States by tracking associated CPT codes, a standardized set of codes for billing medical procedures and services, across 11 billion insurance claims. They identified 32 CPT codes linked to 16 medical AI procedures. Although usage for each medical AI procedure has grown exponentially, overall adoption remains nascent, with most claims driven by a handful of leading devices. For instance, AI devices for assessing coronary artery disease and diagnosing diabetic retinopathy have accumulated over 10,000 CPT claims. Adoption is considerably higher in zip codes with academic hospitals and those that are metropolitan and higher-income. The study also highlights how CPT code pricing reflects the value of AI, such as whether the devices reduce physician work or increase operating costs. Interestingly, reimbursement rates vary between Medicare and private insurers, with the latter potentially negotiating rates more aligned with actual costs and perceived value.

Read Paper | NEJM AI

3. Data Sharing. How to facilitate and streamline global medical-imaging data sharing in a grass-root approach?

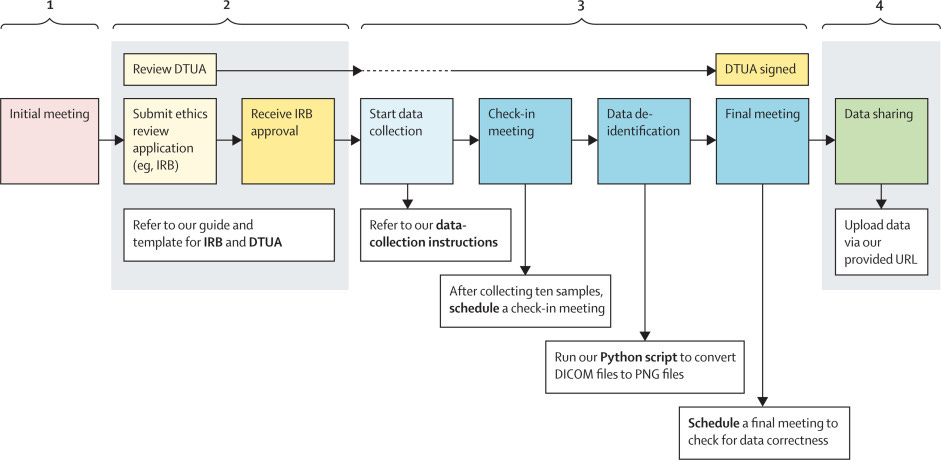

In this Comment, Saenz et al. introduce a framework for global medical imaging data sharing and share their experience in coordinating the Medical AI Data for All (MAIDA) initiative. MAIDA takes a grassroots approach, directly reaching out to and working with individuals at hospitals worldwide to collect representative chest X-ray datasets. The authors highlight the importance of providing templates to help these individuals obtain legal and ethics review approvals. They recommend allotting adequate time for approvals, as this is usually the most time-consuming step, often taking months. Because medical practices vary between institutions, the authors emphasize being flexible to accommodate differences rather than relying solely on standardized guidelines. Key recommendations include having comprehensive protocols and agreements ready, maintaining rigorous documentation for data quality and de-identification, but also adapting to individual institutional needs.

Read Paper | The Lancet Digital Health

4. Large Language Model. The Eliza effect is the tendency to falsely ascribe human-like qualities to conversational agents. This phenomenon poses risks of emotional manipulation for users. How can we counter anthropomorphism in large language models (LLMs)?

In this perspective, Shanahan et al. propose using the concepts of role play and simulation to understand the behavior of LLM-based dialogue agents. Framing dialogue agent behaviors as "role-play all the way down" allows us to make sense of phenomena like apparent deception and self-awareness without falling into the trap of anthropomorphism. The LLM-based dialogue agent is not a singular entity with beliefs and desires, but rather role-plays a distribution of possible characters that is refined as the dialogue progresses. Overall, the role-play perspective provides a useful framework for understanding agents without ascribing human characteristics they lack.

Read Paper | Nature

5. Ethics. 10 ethical principles for generative AI (GenAI) in healthcare.

Ning et al. conducted a systematic scoping review of 193 articles on the ethical considerations for using GenAI in healthcare. The analysis reveals gaps in the current literature, including a lack of concrete solutions beyond regulations and guidelines, insufficient discussion beyond large language models, and no consensus on a comprehensive set of relevant ethical issues for GenAI evaluation. To address these gaps, they propose the TREGAI checklist, a tool to facilitate transparent reporting of ethical discussions for GenAI research and applications.

Read Paper | arXiv preprint

-- Emma Chen, Pranav Rajpurkar & Eric Topol