Week 201

Obstetrical Sonography, Tactile-Based Gastrointestinal Screening, ClinAIOps, Brain Modeling

We are moving our newsletter to Substack for a better experience!

In Week #201 of the Doctor Penguin newsletter, the following papers caught our attention:

1. Obstetrical Sonography. While deep learning models have recently demonstrated human-level performance in fetal ultrasound image analysis, most prior studies were retrospective, limited to Caucasian populations, and based on still images rather than video.

Slimani et al. developed deep learning models to automate fetal biometry (FB) and amniotic fluid volume (AFV) assessment in ultrasound images for detecting potential fetal complications. The models were trained on 172K images from Morocco and public datasets, then tested on ultrasound scans from 172 pregnant patients across 4 centers in Morocco. Agreement between the models and practitioners, measured by 95% limits of agreement, exceeded the consistency among human experts. To make the automated assessment understandable and auditable, the authors separated the workflow into brain, abdomen, and femur segmentation, standard biometry plane classification, and quality criteria assessments following the guidelines of the International Society of Ultrasound in Obstetrics and Gynecology (ISUOG). Extracting the optimal biometric planes according to ISUOG criteria can produce results similar to those of expert sonographers, enable quality audits, and serve as a training tool for sonographers. The automated AFV assessment also reduced the sonographer's workflow from more than 12 steps to just 3. These benefits could prove particularly useful in resource-limited regions such as Africa, where only 38.3% of fetal ultrasound operators have received formal training, and only 40.4% have taken a short theoretical course.
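For readers unfamiliar with the metric, 95% limits of agreement are conventionally computed Bland-Altman style: take the paired differences between two sets of measurements, then report the mean difference (bias) plus or minus 1.96 standard deviations. The sketch below is only an illustration of that formula. The function name and the femur-length values are hypothetical, and we are assuming, not asserting, that the paper follows this standard definition.

```python
import statistics


def limits_of_agreement(model_values, expert_values):
    """Return (bias, lower, upper) for Bland-Altman 95% limits of agreement."""
    if len(model_values) != len(expert_values):
        raise ValueError("both measurement lists must have the same length")
    # Paired differences: model measurement minus expert measurement.
    diffs = [m - e for m, e in zip(model_values, expert_values)]
    bias = statistics.mean(diffs)
    spread = statistics.stdev(diffs)  # sample standard deviation
    return bias, bias - 1.96 * spread, bias + 1.96 * spread


# Hypothetical femur-length measurements in millimetres (not from the paper).
model_mm = [31.0, 45.2, 52.1, 60.3, 66.0]
expert_mm = [30.5, 45.0, 53.0, 60.0, 66.4]

bias, lower, upper = limits_of_agreement(model_mm, expert_mm)
print(f"bias = {bias:.2f} mm, 95% limits of agreement = [{lower:.2f}, {upper:.2f}] mm")
```

Narrower limits mean the two raters disagree less, which is why the same quantity can be used to compare model-versus-expert agreement against expert-versus-expert agreement.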

Read Paper | Nature Communications

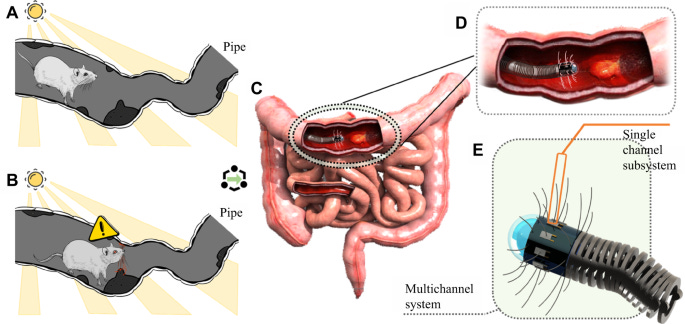

2. Tactile-Based Gastrointestinal Screening. Could we complement current vision-based gastrointestinal screening methods with sensors inspired by rat whiskers, which are naturally good at texture recognition and distance perception, to detect polyps, mucosal disease, and other abnormalities on the gastrointestinal wall?

Wang et al. proposed a biomimetic artificial whisker-based hardware system with AI-enabled self-learning capability for endoluminal diagnosis. They tested an early single-whisker prototype in which a guitar string, serving as the whisker, is glued to a polyvinylidene fluoride film transducer that generates electric charge proportional to the stress when the film is vibrated or deformed. The raw signal is fed directly into a CNN- and LSTM-based model that distinguishes 3 common tissue types (normal tissue, ulcerative colitis, and ulcerative rectal cancer) as the whisker sweeps across the surface of a phantom designed to mimic the properties of the intestinal tract. In a proof-of-concept experiment, the authors ran 120 trials per tissue type on the phantom, each trial simulating a complete hand-held screening sequence from the system's first contact with the surface to the moment it leaves. The system reached a test accuracy of up to 94.44% and a kappa of 0.9167, showing the potential of tactile-based systems for gastrointestinal screening applications.
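As a quick aside on how accuracy and kappa relate, Cohen's kappa rescales observed agreement by the agreement expected from chance: kappa = (p_o - p_e) / (1 - p_e). The sketch below computes both from a confusion matrix. The 3x3 matrix is hypothetical, chosen only because it reproduces the reported figures with balanced classes; this summary does not give the paper's actual test split or per-class errors.

```python
def accuracy_and_kappa(confusion):
    """Return (accuracy, Cohen's kappa) for a square confusion matrix.

    Rows are true classes, columns are predicted classes.
    """
    size = len(confusion)
    total = sum(sum(row) for row in confusion)
    # Observed agreement: fraction of trials on the diagonal.
    observed = sum(confusion[i][i] for i in range(size)) / total
    row_totals = [sum(row) for row in confusion]
    col_totals = [sum(confusion[i][j] for i in range(size)) for j in range(size)]
    # Chance agreement: product of the true and predicted class frequencies.
    expected = sum(r * c for r, c in zip(row_totals, col_totals)) / total**2
    return observed, (observed - expected) / (1 - expected)


# Hypothetical test set: 12 trials per class, 2 of 36 misclassified.
# Class order: normal tissue, ulcerative colitis, ulcerative rectal cancer.
hypothetical_confusion = [
    [12, 0, 0],
    [0, 11, 1],
    [0, 1, 11],
]

accuracy, kappa = accuracy_and_kappa(hypothetical_confusion)
print(f"accuracy = {accuracy:.2%}, kappa = {kappa:.4f}")
```

With three equally sized classes, chance agreement is 1/3, so an accuracy of 94.44% corresponds to a kappa of about 0.9167, which is consistent with the two numbers reported above.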

Read Paper | npj Robotics

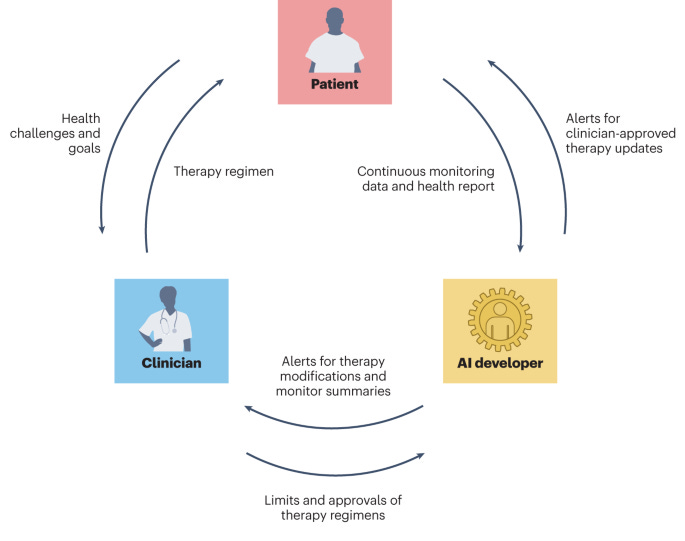

3. ClinAIOps. In software development, DevOps is an important practice for evolving and improving products quickly by integrating and automating software development (Dev) and IT operations (Ops). How could we bring this concept to medical AI, so that we can operationalize model training and improvement, deployment, and monitoring in a complex, high-stakes clinical environment that involves human stakeholders (patients and clinicians)?

In this Perspective, Chen et al. propose ClinAIOps, a framework to operationalize AI in clinical settings by coordinating stakeholders through feedback loops. In the context of continuous therapeutic monitoring (CTM), ClinAIOps enables patients to make timely treatment adjustments using AI outputs, clinicians to oversee patient progress with AI assistance, and AI developers to receive continuous feedback from patients and clinicians for quality assurance and model improvement. By tightening the links between patient health, therapeutic regimens, and clinical interactions, applying ClinAIOps in CTM can replace the infrequent, inaccurate, and delayed reporting of symptoms and events, allowing patients to focus on understanding the determinants of their health, and clinicians to deliver and evaluate patient-specific and evidence-based interventions. This may be particularly beneficial for patients with limited ability to self-report symptoms, with limited mobility or access to medical centers, or who experience difficulties in adhering to complex therapeutic regimens.

Read Paper | Nature Biomedical Engineering

4. Brain Modeling. With the growing body of brain data, could we build computational models that simulate brain activity?

In this Comment, Jain discusses how AI could help integrate diverse brain data sources, such as connectomes (maps of neuron connectivity and morphology that capture a static snapshot of a brain), functional recordings, and transcriptomics, to build better computational models that simulate neural activity. Researchers could evaluate these models by comparing their predicted activity to recordings from biological systems. Compared to the current physics-based or anatomy-driven approaches to brain modeling, these AI models would rely less on ever-more detailed measurements of individual components and would make it easier to incorporate comprehensive data. However, key challenges remain around acquiring whole-brain multimodal data from the same specimens and agreeing on modeling targets and metrics (e.g., should models predict single-neuron or whole-brain activity? What defines an accurate reproduction of biological neural activity?).

Read Paper | Nature

-- Emma Chen, Pranav Rajpurkar & Eric Topol