Week 192

Arteriovenous Fistula Stenosis, Medical Image Segmentation, Odor Map, Large Language Model

We are moving our newsletter to Substack for a better experience!

In Week #192 of the Doctor Penguin newsletter, the following papers caught our attention:

1. Arteriovenous Fistula Stenosis. Arteriovenous fistulas (AVFs) are critical for performing hemodialysis in patients with kidney failure. Screening for stenosis, one of the most common causes of AVF dysfunction, can improve the longevity of AVFs, reduce healthcare costs, and enhance patients' quality of life.

Zhou et al. developed a vision transformer (ViT) model to detect AVF stenosis from blood flow sounds recorded with a digital stethoscope. The sounds are converted to spectrogram images, which the ViT classifies as patent or stenotic. The ViT outperformed convolutional neural networks (CNNs), likely because it is not constrained by the CNN inductive biases of translational invariance and locality, which allows it to explore the parameter space more freely and find rules that generalize better. Additionally, the ViT maintains a global receptive field at every layer, whereas convolutions only aggregate local information. The ViT model can automatically screen for stenosis at a level comparable to that of a nephrologist performing a physical exam. This could help dialysis technicians with high patient volumes ensure safety and streamline workflows for cost savings.

npj Digital Medicine

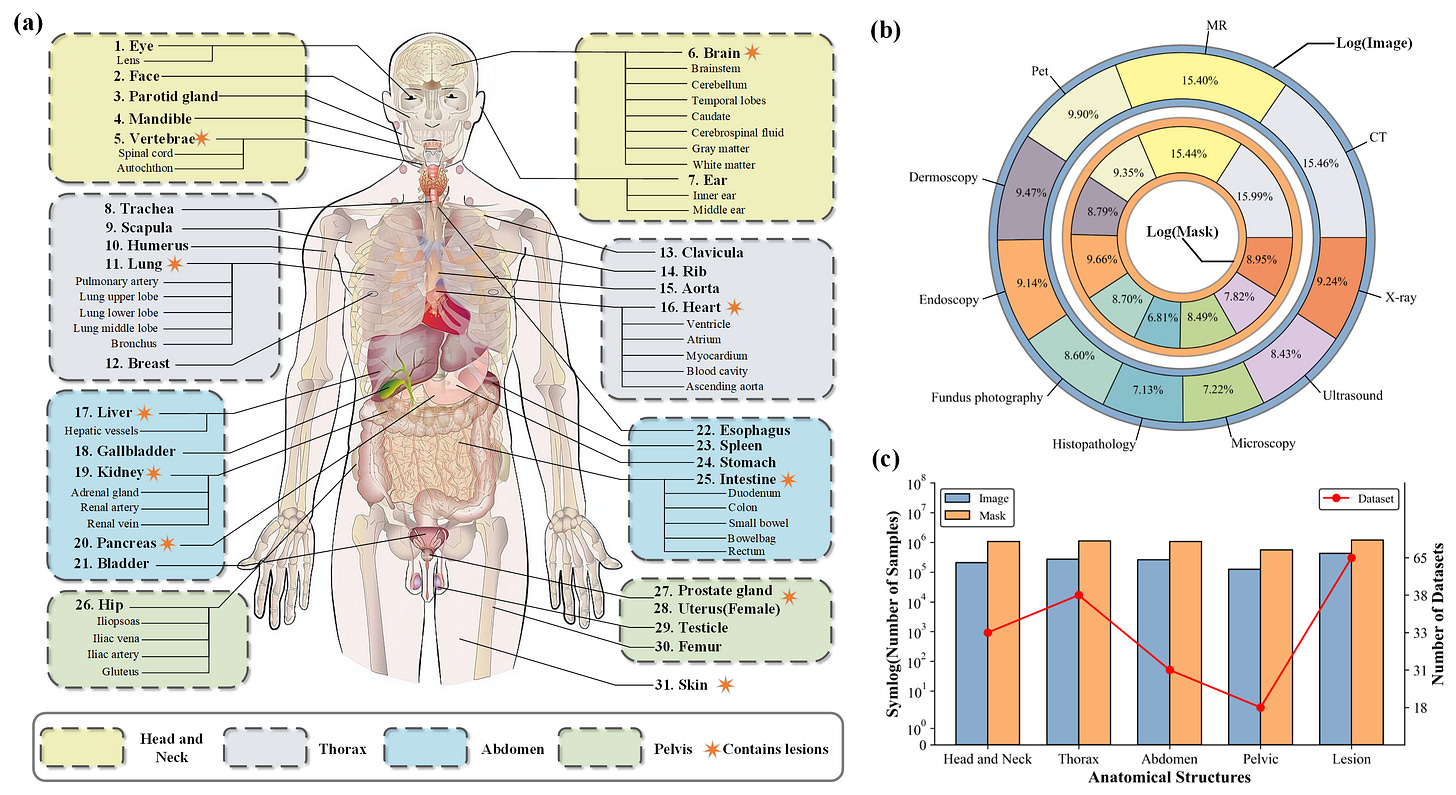
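To make the audio-to-spectrogram step concrete, here is a minimal sketch in plain Python. It is not the authors' preprocessing pipeline: the frame size, hop length, Hann window, and the synthetic test tone are all assumptions chosen for illustration, and a real pipeline would more likely use an FFT library and a mel or log frequency scale. The resulting 2D array of magnitudes is the kind of "image" a ViT could take as input.

```python
import cmath
import math


def spectrogram(signal, frame_size=64, hop=32):
    """Magnitude spectrogram via a naive short-time DFT (illustrative, O(N^2) per frame)."""
    # Hann window reduces spectral leakage at the frame edges.
    window = [0.5 - 0.5 * math.cos(2 * math.pi * n / (frame_size - 1))
              for n in range(frame_size)]
    frames = []
    for start in range(0, len(signal) - frame_size + 1, hop):
        frame = [signal[start + n] * window[n] for n in range(frame_size)]
        magnitudes = []
        # Keep only the non-negative frequency bins (0 .. frame_size / 2).
        for k in range(frame_size // 2 + 1):
            acc = sum(frame[n] * cmath.exp(-2j * math.pi * k * n / frame_size)
                      for n in range(frame_size))
            magnitudes.append(abs(acc))
        frames.append(magnitudes)
    return frames  # rows are time frames, columns are frequency bins


# Hypothetical stand-in for a recording: a pure 128 Hz tone sampled at 1024 Hz.
sample_rate = 1024
tone_hz = 128
signal = [math.sin(2 * math.pi * tone_hz * t / sample_rate) for t in range(512)]

spec = spectrogram(signal)
# Each bin spans sample_rate / frame_size = 16 Hz, so the tone should land in bin 8.
peak_bin = max(range(len(spec[0])), key=lambda k: spec[0][k])
print(len(spec), len(spec[0]), peak_bin)
```

Each row of `spec` is one time slice and each column one frequency band, so a change in the pitch content of the blood flow sound would show up as energy shifting between columns over time.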

2. Medical Image Segmentation. The Segment Anything Model (SAM) is a state-of-the-art model for natural image segmentation. However, because of the significant domain gap between natural images and medical images, directly applying the pretrained SAM to medical image segmentation does not yield satisfactory performance.

Cheng et al. developed SAM-Med2D for medical image segmentation by transferring SAM from natural images to the largest medical image segmentation dataset curated to date, with about 4.6M images and 19.7M masks from public and private datasets. The dataset covers various imaging modalities (such as CT, MR, and X-ray), different organs, and multiple pathological conditions (such as tumors and inflammations). SAM-Med2D demonstrated (1) superior performance in handling complex organ structures, lesions, and cases with unclear boundaries, (2) broad segmentation capability across prompt types (bounding boxes, points, and masks), and (3) strong generalization ability, allowing direct application to unseen medical image data.

arXiv preprint

3. Odor Map. Mapping the physical properties of a stimulus to perceptual characteristics is a fundamental challenge in neuroscience. In vision, wavelength corresponds to color; in audition, frequency maps to pitch. However, the relationship between chemical structures and smell perceptions remains unclear.

Lee et al. developed graph neural networks to create a principal odor map (POM) that preserves perceptual relationships and enables odor quality prediction for previously uncharacterized odorants. Each odor molecule is modeled as a graph, where atoms are described by valence, degree, hydrogen count, hybridization, formal charge, and atomic number, and bonds are described by degree, aromaticity, and ring membership. The model characterized smells as reliably as a human. When prospectively tested on 400 new odorants, the model-generated odor profile matched the trained panel mean (the gold standard for odor characterization) more closely than the median panelist did. With simple transformations applied, the POM outperformed chemoinformatic models on smell prediction tasks such as odor detectability, similarity, and descriptor applicability, which suggests that the POM successfully captured structure-smell relationships.

Science
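The molecule-as-graph representation described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the authors' model: the `Atom` and `Bond` classes, the integer coding of hybridization, the reading of bond "degree" as bond order, and the single sum-aggregation step are all hypothetical choices, and ethanol is hand-encoded only as a small example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Atom:
    atomic_number: int
    valence: int
    degree: int            # number of bonded heavy (non-hydrogen) atoms
    hydrogen_count: int
    hybridization: str     # "sp", "sp2", or "sp3"
    formal_charge: int

    def features(self):
        # Assumption: hybridization coded as a small integer for simplicity.
        hyb = {"sp": 1, "sp2": 2, "sp3": 3}[self.hybridization]
        return [self.valence, self.degree, self.hydrogen_count,
                hyb, self.formal_charge, self.atomic_number]


@dataclass(frozen=True)
class Bond:
    a: int                 # index of the first atom
    b: int                 # index of the second atom
    order: int             # assumption: bond "degree" read as bond order
    aromatic: bool
    in_ring: bool


def message_pass(atoms, bonds):
    """One sum-aggregation step: each atom's features plus those of its neighbors."""
    feats = [atom.features() for atom in atoms]
    updated = [list(f) for f in feats]
    for bond in bonds:
        # Bonds are undirected, so messages flow both ways.
        for src, dst in ((bond.a, bond.b), (bond.b, bond.a)):
            updated[dst] = [u + f for u, f in zip(updated[dst], feats[src])]
    return updated


# Ethanol (CH3-CH2-OH), heavy atoms only: C, C, O.
ethanol_atoms = [
    Atom(atomic_number=6, valence=4, degree=1, hydrogen_count=3, hybridization="sp3", formal_charge=0),
    Atom(atomic_number=6, valence=4, degree=2, hydrogen_count=2, hybridization="sp3", formal_charge=0),
    Atom(atomic_number=8, valence=2, degree=1, hydrogen_count=1, hybridization="sp3", formal_charge=0),
]
ethanol_bonds = [
    Bond(a=0, b=1, order=1, aromatic=False, in_ring=False),
    Bond(a=1, b=2, order=1, aromatic=False, in_ring=False),
]

updated = message_pass(ethanol_atoms, ethanol_bonds)
print(updated)
```

A trained graph neural network would replace the plain sum with learned transformations, use the bond features as well, and repeat the step several times before pooling the atom vectors into a single embedding per molecule.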

4. Large Language Model. Recent reports on large language models.

Beam et al. evaluated the performance of ChatGPT 3.5 on practice questions for the neonatal-perinatal board examination. They showed that ChatGPT 3.5 currently lacks the ability to reliably answer neonatal-perinatal board examination questions and does not consistently provide explanations that are aligned with the scientific and medical consensus.

JAMA Pediatrics

Strong et al. compared the performance of students with that of ChatGPT 3.5 and ChatGPT 4 on clinical reasoning final examinations given to first- and second-year students at Stanford School of Medicine. They showed that ChatGPT 4 outperformed first- and second-year students on these examinations and improved significantly over ChatGPT 3.5.

JAMA Internal Medicine

Pan et al. assessed the quality of responses generated by 4 AI chatbots (ChatGPT 3.5, Perplexity, Chatsonic, and Bing AI) to Google Trends’ top 5 search queries concerning the 5 most common cancers in the US (skin, lung, breast, colorectal, and prostate cancer). The chatbots generally produced reliable and accurate medical information about the cancers, but the responses were written at a college reading level and were poorly actionable.

JAMA Oncology

Chen et al. evaluated how well ChatGPT 3.5 provides cancer treatment recommendations concordant with National Comprehensive Cancer Network guidelines. They showed that the model did not perform well at providing accurate cancer treatment recommendations and that its most likely error was mixing incorrect recommendations in among correct ones, an error difficult even for experts to detect.

JAMA Oncology

Bernstein et al. compared human-written and ChatGPT 3.5-generated responses to 200 eye care questions from an online advice forum. ChatGPT 3.5 appeared to be capable of responding to long user-written eye health posts and largely generated appropriate responses that did not differ significantly from ophthalmologist-written responses in terms of incorrect information, likelihood of harm, extent of harm, or deviation from ophthalmologist community standards.

JAMA Network Open

-- Emma Chen, Pranav Rajpurkar & Eric Topol